Python Matrix Operations on the GPU

My matrices are dense and mostly random, and the for loops are compiled with Cython. The setup is `import numpy as np`, `m_size = 1000`, `sim_length = 50`, `a = np.random.rand(m_size, m_size)`, `b = np.random.rand(m_size, m_size)`, followed by `for j in range(sim_length):`.

Easy dense matrix manipulation is useful for performing matrix operations on the GPU. Many matrix operations are supported: multiplication and transpose; elementwise addition, subtraction, multiplication, and division; and elementwise application of exp, log, pow, and sqrt.

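Python matrix operations on the GPU. On the CPU side: `CPU *= 5`, `e = time.time()`, then print the CPU time. On the GPU side: `GPU *= 5`, then `cp.cuda.Stream.null.synchronize()` to let the code finish executing on the GPU. The script starts with `import numpy as np`, `import cupy as cp`, and `import time`. NumPy and CPU: `s = time.time()`, `A = np.random.random((10000, 10000))`.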

To add two matrices, you can create them with `numpy.array()` and add them using the `+` operator. The `vectorize` decorator takes as input the signature of the function that is to be accelerated, along with the target for machine code generation. Thus, running a Python script on a GPU can prove to be considerably faster than on a CPU. Note, however, that to process a data set on the GPU, the data must first be transferred to the GPU's memory.

`B = np.random.random((10000, 10000))`, `CPU = np.matmul(A, B)`. `prange` combined with the Numba haversine function yielded a 500x increase in speed over a geopy Python solution (6-core/12-thread machine). A Numba CUDA kernel on an RTX 2070 yielded an additional 15x increase in speed, or 7500x faster than the geopy Python solution. I think the most used library for sparse matrix operations using CUDA is cuSPARSE, which already comes included in the CUDA toolkit and supports all common sparse matrix formats. There is also a Python wrapper for it.

It does this by compiling Python into machine code on the first invocation and running it on the GPU. `s = time.time()`, `x_gpu *= 5`. In Python, we can perform many different matrix manipulations and operations.

If you are going to perform only one iteration on the matrix, it is generally better to do it on the CPU rather than on a GPU. `cp.cuda.Stream.null.synchronize()`, `e = time.time()`, `print(e - s)`. In this case, NumPy performed the process in 1.49 seconds on the CPU, while CuPy performed the process in 0.0922 seconds on the GPU. CuPy is accelerated with the CUDA platform from NVIDIA and also uses CUDA-related libraries, including cuBLAS, cuDNN, cuRAND, cuSOLVER, cuSPARSE, and NCCL, to make full use of the GPU architecture.
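The timing fragments on this page can be assembled into one small benchmark. The following is a minimal sketch, not the original author's script: the function names `time_cpu` and `time_gpu` and the matrix size `n = 500` are assumptions chosen so it runs quickly, and the CuPy part is skipped when CuPy or a CUDA device is not available.

```python
import time

import numpy as np

# CuPy is optional here (an assumption for portability): without it, or
# without a CUDA device, only the CPU timing is reported.
try:
    import cupy as cp
    if cp.cuda.runtime.getDeviceCount() == 0:
        cp = None
except Exception:
    cp = None


def time_cpu(n):
    """Create two random n x n matrices, multiply and scale them on the CPU."""
    s = time.time()
    a = np.random.random((n, n))
    b = np.random.random((n, n))
    out = np.matmul(a, b)
    out *= 5
    return time.time() - s, out


def time_gpu(n):
    """Same work with CuPy; synchronize so the timer waits for the GPU."""
    s = time.time()
    c = cp.random.random((n, n))
    d = cp.random.random((n, n))
    out = cp.matmul(c, d)
    out *= 5
    cp.cuda.Stream.null.synchronize()
    return time.time() - s, out


n = 500  # hypothetical size; the page's fragments use 10000
cpu_seconds, cpu_result = time_cpu(n)
print(f"CPU time: {cpu_seconds:.2f}")

if cp is not None:
    gpu_seconds, gpu_result = time_gpu(n)
    print(f"GPU time: {gpu_seconds:.2f}")
else:
    print("CuPy or a CUDA device is not available; GPU timing skipped.")
```

The call to `cp.cuda.Stream.null.synchronize()` matters because GPU work is launched asynchronously. Without it, the timer may stop before the multiplication has finished.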

All the matrix operations that we can perform on a Python matrix using lists can also be performed by importing the NumPy library into our Python program. The GPU carries an overhead because you need to copy the matrix data from CPU memory to GPU memory and copy the results back from the GPU to the CPU. The matrix operations that can be done include addition, subtraction, multiplication, transpose, reading the rows and columns of a matrix, slicing the matrix, etc.

All matrix operations using the NumPy library can be performed on arrays created with the `numpy.array()` function. In this case, `cuda` implies that the machine code is generated for the GPU. Matrix operations using the NumPy library on a Python matrix.
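The `vectorize` decorator mentioned on this page comes from Numba. The sketch below is illustrative only and makes several assumptions: it uses `target="cpu"` so it runs without a GPU (the text's `cuda` target would be selected by changing that string on a machine with a CUDA device), the function name `add_elementwise` is hypothetical, and a plain `np.vectorize` stand-in is substituted when Numba is not installed.

```python
import numpy as np

try:
    from numba import vectorize
except Exception:
    # Stand-in (an assumption, not Numba itself): mimics the decorator's
    # call shape with np.vectorize so the example still runs without Numba.
    def vectorize(signatures, target="cpu"):
        def decorator(func):
            return np.vectorize(func, otypes=[np.float32])
        return decorator


# The first argument is the signature of the function to accelerate;
# `target` selects where the machine code is generated.
@vectorize(["float32(float32, float32)"], target="cpu")
def add_elementwise(a, b):
    return a + b


x = np.arange(8, dtype=np.float32)
y = np.ones(8, dtype=np.float32)
result = add_elementwise(x, y)
print(result)
```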

Define a function that takes in two inputs. GPU Acceleration in Python: (1) Graphics Processing Units: introduction to general purpose GPUs, data parallelism; (2) PyOpenCL: parallel programming of heterogeneous systems, matrix-matrix multiplication; (3) PyCUDA: about PyCUDA, matrix-matrix multiplication; (4) CuPy: about CuPy. (Scientific Software, MCS 507, GPU Acceleration in Python, L-11, 20 September 2019.) Whether the GPU is faster depends upon the size of the matrix and the number of iterations that need to be performed.

`subtract()`: subtract the elements of two matrices. To multiply them, you can make use of the NumPy `dot()` method. CuPy's interface is highly compatible with NumPy.
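As a short illustration of the NumPy operations named on this page, the sketch below uses two small matrices. The values and variable names are made up for the example.

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

added = a + b                   # elementwise addition, same as np.add(a, b)
subtracted = np.subtract(a, b)  # elementwise subtraction
elementwise = a * b             # elementwise multiplication
product = np.dot(a, b)          # matrix product
transposed = a.T                # transpose
first_row = a[0]                # read a row
second_column = a[:, 1]         # read a column by slicing

print(product)
```

Note that `*` multiplies element by element, while `np.dot()` computes the matrix product.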

Now let's do matrix multiplication in pure Python. CuPy is an open-source library with NumPy syntax that increases speed by doing matrix operations on NVIDIA GPUs. My naive guess would be that I…

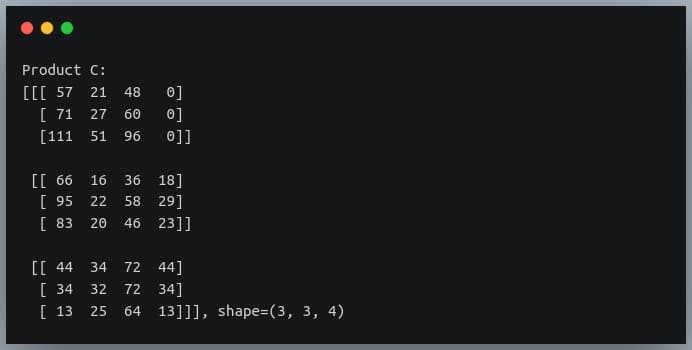

OK, the two fastest curves on the right correspond to the ones plotted in the first figure. Break the shape of each input into rows and columns. One of the operations he tried was the multiplication of matrices, using `np.dot()` for NumPy and `tf.matmul()` for TensorFlow.

A more modest but still great 16.16X speedup. `add()`: add the elements of two matrices. GPUs have more cores than CPUs, and hence, when it comes to parallel computing of data, GPUs perform exceptionally better than CPUs, even though GPUs have lower clock speeds and lack several core management features compared to CPUs.

`result = np.dot(a, b)`. Note: the NumPy module provides different methods for matrix operations. Execution time for matrix multiplication: logarithmic scale on the left, linear scale on the right.

We can do this by leveraging for loops. Running a Python script on the GPU. Accelerating Python: cudamat provides a CUDA-based Python matrix class whose primary goal is easy dense matrix manipulation.

Overall, the Python/CUDA ecosystem still seems weirdly fractured, with no obvious choice existing for many common tasks. `D = cp.random.random((10000, 10000))`, `GPU = cp.matmul(C, D)`. CuPy and GPU: `s = time.time()`, `C = cp.random.random((10000, 10000))`.

Assert that the columns of the first input equal the rows of the second input, since, as we saw above, matrix multiplication is done by turning the second input. Numba exposes easy explicit parallelization with `prange` for independent operations. It will take the following steps.
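The pure Python steps described on this page (define a function that takes two inputs, break each input's shape into rows and columns, assert that the inner dimensions match, then use for loops) can be sketched as follows. The function name `matmul` and the sample values are assumptions for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of lists, using plain for loops."""
    # Break the shape of each input into rows and columns.
    rows_a, cols_a = len(a), len(a[0])
    rows_b, cols_b = len(b), len(b[0])

    # The columns of the first input must equal the rows of the second.
    assert cols_a == rows_b, "inner dimensions do not match"

    # Result has the rows of the first input and the columns of the second.
    result = [[0] * cols_b for _ in range(rows_a)]
    for i in range(rows_a):
        for j in range(cols_b):
            for k in range(cols_a):
                result[i][j] += a[i][k] * b[k][j]
    return result


product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19, 22], [43, 50]]
```

The triple loop performs on the order of n³ multiplications for n x n inputs, which is why the compiled and GPU-backed libraries discussed here are much faster on large matrices.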

In most cases it can be used as a.

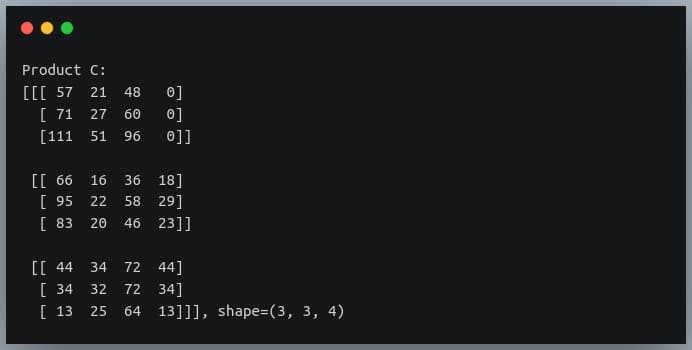

Cuda Python Matrix Multiplication Programmer Sought

Simple Matrix Multiplication In Cuda Youtube

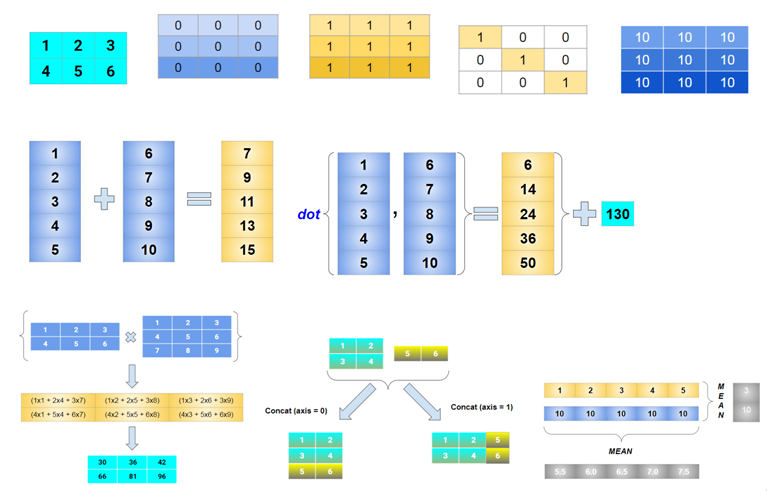

Neural Networks Tutorial Deep Learning Matrix Multiplication Tutorial

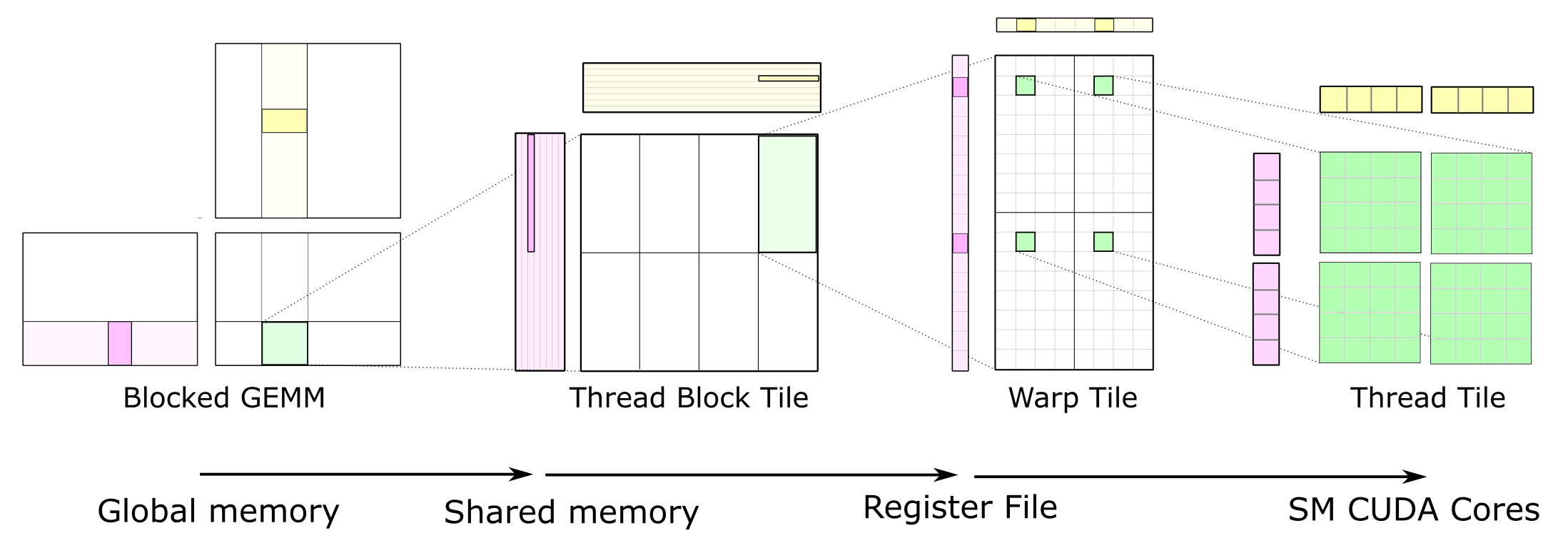

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Visual Representation Of Matrix And Vector Operations And Implementation In Numpy Torch And Tensor Towards Ai The Best Of Tech Science And Engineering

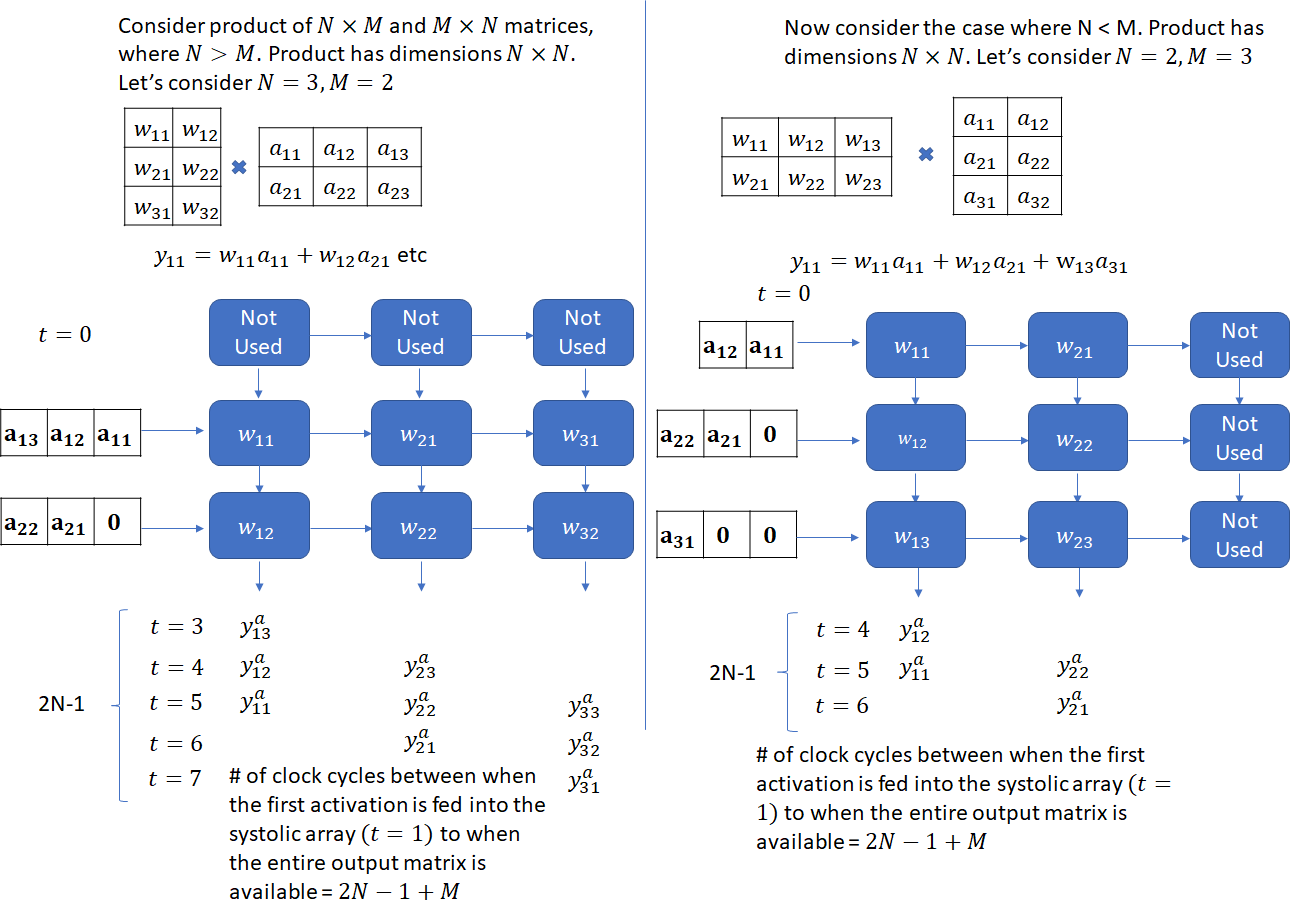

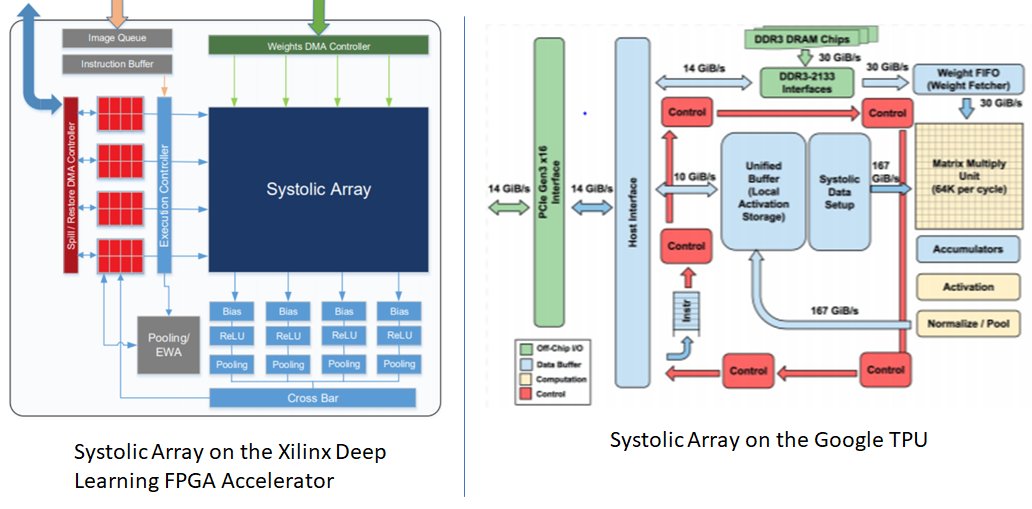

Understanding Matrix Multiplication On A Weight Stationary Systolic Architecture Telesens

Understanding Matrix Multiplication On A Weight Stationary Systolic Architecture Telesens

Matrix Multiplication An Overview Sciencedirect Topics

20 Examples For Numpy Matrix Multiplication Like Geeks

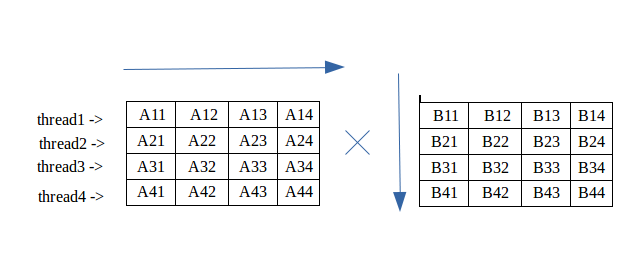

Multiplication Of Matrix Using Threads Geeksforgeeks

A Shallow Dive Into Tensor Cores The Nvidia Titan V Deep Learning Deep Dive It S All About The Tensor Cores

Cuda Python Matrix Multiplication Programmer Sought

Matrix Multiplication On Cpu Numpy And Gpu Gnumpy Give Different Results Stack Overflow

Sparse Matrix Multiply Assembly Kernel The Compute Kernel Is In Figure Download Scientific Diagram

Introduction To Cuda Lab 03 Gpucomputing Sheffield