Multiply Matrix Kernel

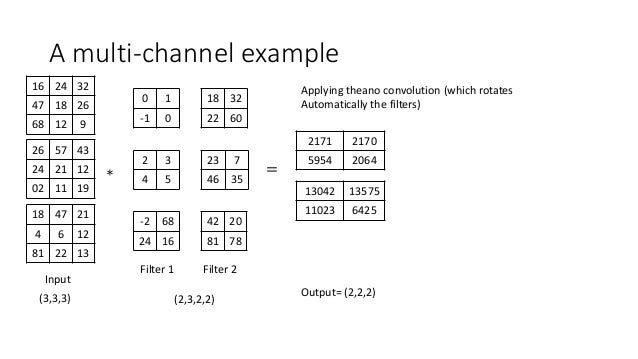

Const int newID1 get_group_id0TRANSPOSEX ty. With the boundary condition checks the tile matrix multiplication kernel is just one more step away from being a general matrix multiplication kernel.

Opencl Matrix Multiplication Sgemm Tutorial

AB C is an m p matrix.

Multiply matrix kernel. Multiplication of an identity matrix by itself. As a result of multiplication you will get a new matrix that has the same quantity of rows as the 1st one has and the same quantity of columns as the 2nd one. Provides several routines for multiplying matrices.

0 B B. The matrix multiplication kernel variations described in this section use execution policies to express the outer row and col loops as well as the inner dot product loop using the RAJA kernel interface. Multiplication of dense matrix by its transpose.

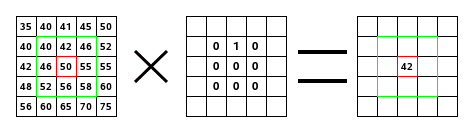

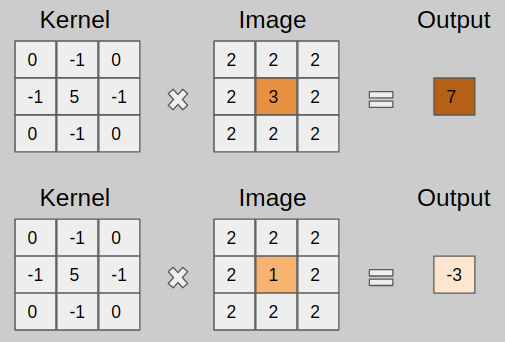

For example in the image below the output value 55 is calculated by the element-wise multiplication between the 3x3 part of the input. 1 C C A. Y block_size_y threadIdx.

Matrix multiplication Matrix inverse Kernel and image Radboud University Nijmegen Matrix multiplication For A an m n matrix B an n p matrix. The output of that is again. For example you can perform this operation with the transpose or conjugate transpose of.

0 B b j1. Its essentially just a multiplication times a matrix and we know that all linear transformations can be expressed as a multiplication of a matrix but this one is equal to the matrix the matrix one three to six times whatever vector you. Routine can perform several calculations.

I sum a row n i b i k col. Writefile matmul_naivecu define WIDTH 4096 __global__ void matmul_kernel float C float A float B int x blockIdx. The most widely used is the.

Store the transposed result coalesced if newID0 Q newID1 P. To do the MNK multiplications and additions we need MNK2 loads and MN stores. Definition of kernel of a transformation.

In general matrix multiplication is defined for rectangular matrices. __global__ void gpu_matrix_mult float a float b float c int m int n int k int row blockIdxy blockDimy threadIdxy. Step 2 You multiply each subimage values with the values of the convolution component wise.

The main condition of matrix multiplication is that the number of columns of the 1st matrix must equal to the number of rows of the 2nd one. For int k 0. This operation adds all the neighboring numbers in the input layer together weighted by a convolution matrix kernel.

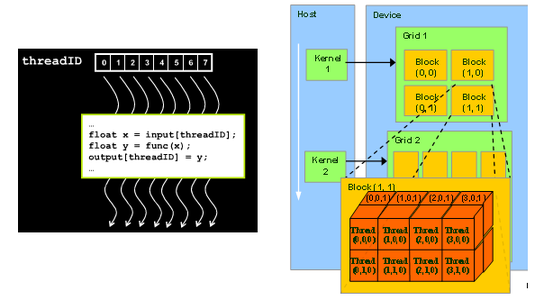

For naive matrix multiply 2 on board. Now lets see what the new matrix-multiplication kernel looks like. Provides several routines for multiplying matrices.

Routine which calculates the product of double precision matrices. Since the multiplications and additions can actually be fused into a. The main reason the naive implementation doesnt perform so well is because we are accessing the GPUs off-chip memory way too much.

Currently our kernel can only handle square matrices. 1 Answer1 Step 1 You slide a window of size 553 over your RGB image carving out subimages of that size. Float sum 0.

Fortunately our kernel can be easily extended to a general matrix multiplication kernel. B jn 1 C A 0 B B. 2n2data 2n2flops These are examples of level 1 2 and 3 routines inBasic LinearAlgebra SubroutinesBLAS.

Spring 2016 Matrix Calculations 10 43. Float sum 00. Int col blockIdxx blockDimx threadIdxx.

Execute the following cell to write our naive matrix multiplication kernel to a file name matmul_naivecu by pressing shiftenter. C row k col sum. If col k.

A i1 a in. CUDA multiple multiplication of the matrix in the kernel code. Const int newID0 get_group_id1TRANSPOSEY tx.

For example you can perform this operation with the transpose or conjugate transpose of. A jk M matrix multiplied by a kl N matrix results in a jl P matrix. X block_size_x threadIdx.

1 C C A c ij Xn k1 a ikb kj A. Example involving the preimage of a set under a transformation. Routine which calculates the product of double precision matrices.

Please count with me. Output newID1Q newID0 buffer tx ty. Int y blockIdx.

Step 3 You add all the values of the. Tiling in the local memory. Routine can perform several calculations.

We like building things on level 3BLAS routines. The most widely used is the.

Matrix Multiplication To Achieve Convolution Operation Programmer Sought

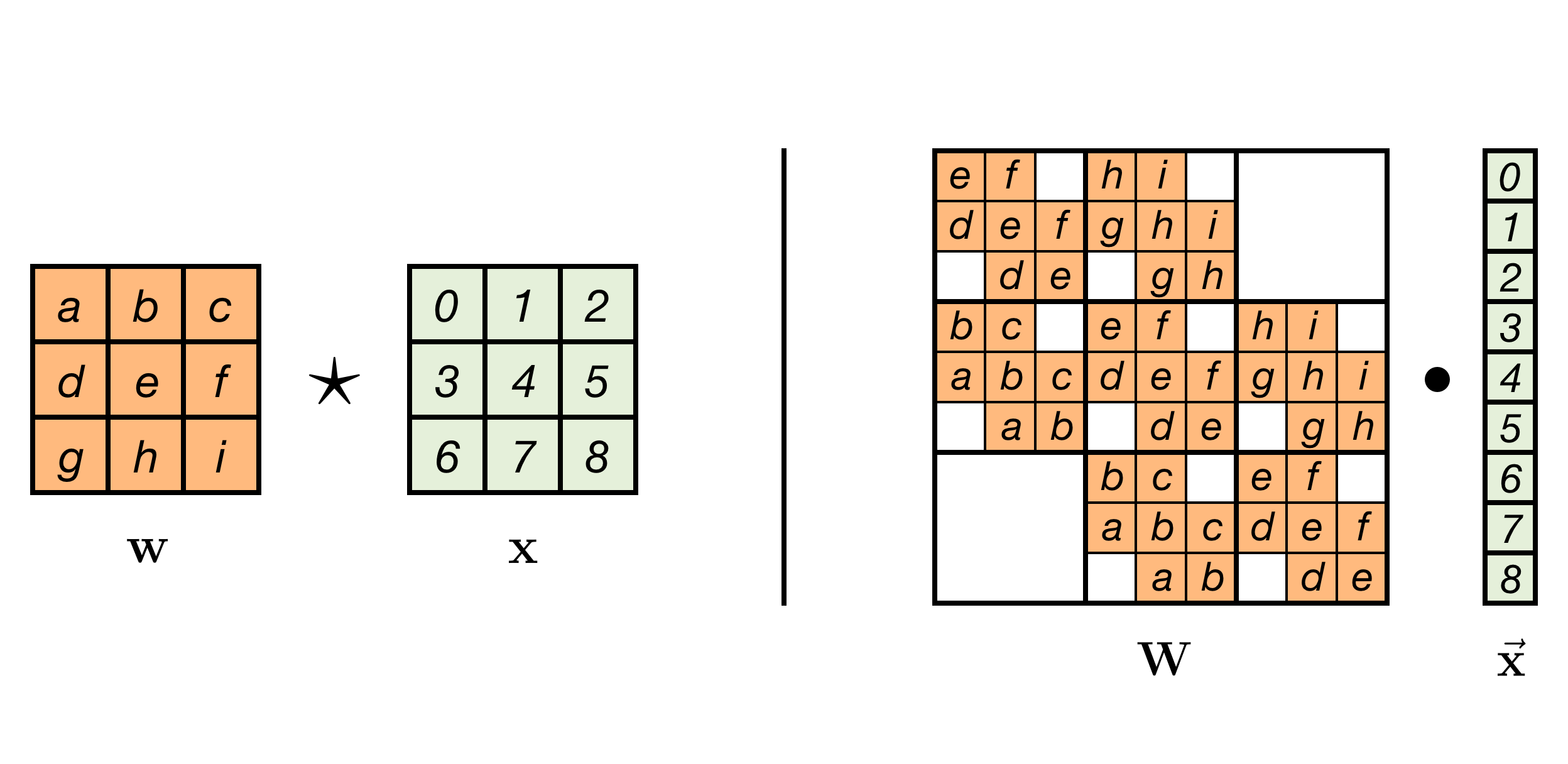

Convolution As Matrix Multiplication

2 D Convolution As A Matrix Matrix Multiplication Stack Overflow

Multiplication Kernel An Overview Sciencedirect Topics

Multiplication Kernel An Overview Sciencedirect Topics

Convolution As Matrix Multiplication

Invertible Convolutions Emiel Hoogeboom

Performing Convolution By Matrix Multiplication F Is Set To 3 In This Download Scientific Diagram

Types Of Convolution Kernels Simplified By Prakhar Ganesh Towards Data Science

Running A Parallel Matrix Multiplication Program Using Cuda On Futuregrid

An Example Of The Deconvolution Process Using Transpose Convolution In Download Scientific Diagram

2 D Convolution As A Matrix Matrix Multiplication Stack Overflow

Mapping Convolutions To Matrix Multiplication Operations Download Scientific Diagram

Why Gemm Is At The Heart Of Deep Learning Pete Warden S Blog

In A Convolutional Neural Network Cnn When Convolving The Image Is The Operation Used The Dot Product Or The Sum Of Element Wise Multiplication Cross Validated

Opencl Matrix Multiplication Sgemm Tutorial

Do The Filters In Deconvolution Layer Same As Filters In Convolution Data Science Stack Exchange

Multiplication Kernel An Overview Sciencedirect Topics