Cuda Fastest Matrix Multiplication

TILED Matrix Multiplication in CUDA by utilizing the lower latency higher bandwidth shared memory within GPU thread blocks. The formula used to calculate elements of d_P is.

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

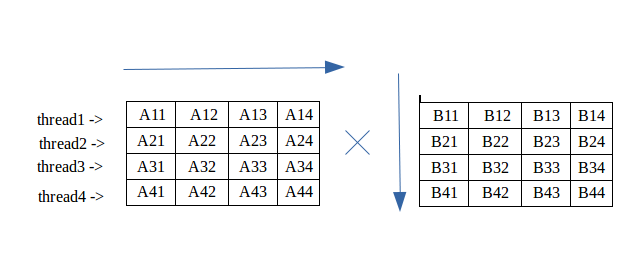

The idea is that this kernel is executed with one thread per element in the output matrix.

Cuda fastest matrix multiplication. Our first example will follow the above suggested algorithm in a second example we are going to significantly simplify the low level memory manipulation required by CUDA. Matrix Multiplication 11 Overview It has become increasingly common to see supercomputing applications harness the massive parallelism of graphics cards Graphics Processing Units or GPUs to speed up computations. Because I needed to manipulate the matrix multiplication I did not use CUBLAS for MM.

Free the memory allocated for a matrix. Each kernel computes the result element ij. Currently I made a neural networks program in the cuda c.

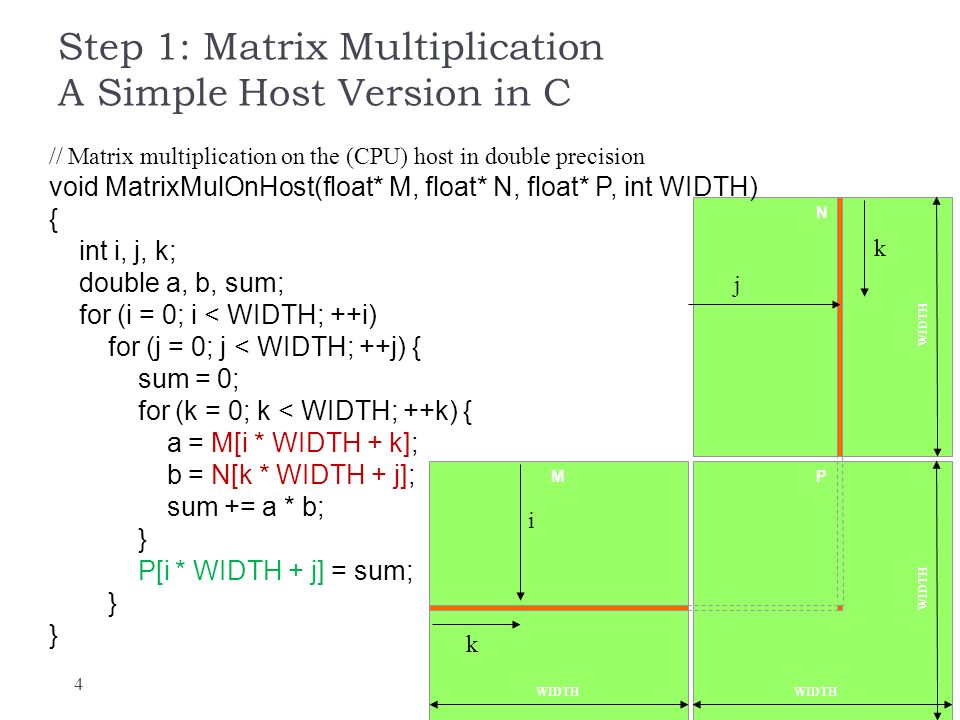

CUDA Matrix Multiplication Shared Memory CUDA Matrix Multiplication Code and Tutorial cuda matrix multiplication codecuda matrix multiplication tutorial. As such each thread ij iterates over the entire row i in matrix A and column j in matrix B. There are 4 different types of memory.

TILED Matrix Multiplication in CUDA by using Shared Constant Memory. I use the following code for MM. Do not load all at one time.

I use the following code for MM. Run make to build the executable of this file. A hierarchy of thread groups shared memory and thread synchronization.

When constructing cuDNN we began with our high-performance implementations of general matrix multiplication GEMM in the cuBLAS library supplementing and tailoring them to efficiently compute convolution. A d_P element calculated by a thread is in blockIdxyblockDimythreadIdxy row and blockIdxxblockDimxthreadIdxx column. This is the.

Currently I made a neural networks program in the cuda c. A typical approach to this will be to create three arrays on CPU the host in CUDA terminology initialize them copy the arrays on GPU the device on CUDA terminology do the actual matrix multiplication on GPU and finally copy the result on CPU. Matrix multiplication between a IxJ matrix d_M and JxK matrix d_N produces a matrix d_P with dimensions IxK.

Load these sub-matrices by block sub-sub-matrices of size BLOCK_SIZE BLOCK_SIZE. This is the. Matrix Multiplication code on GPU with CUDA.

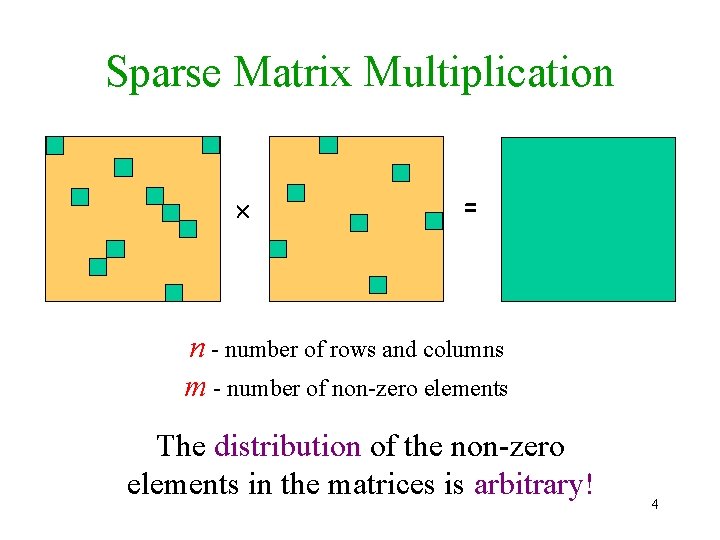

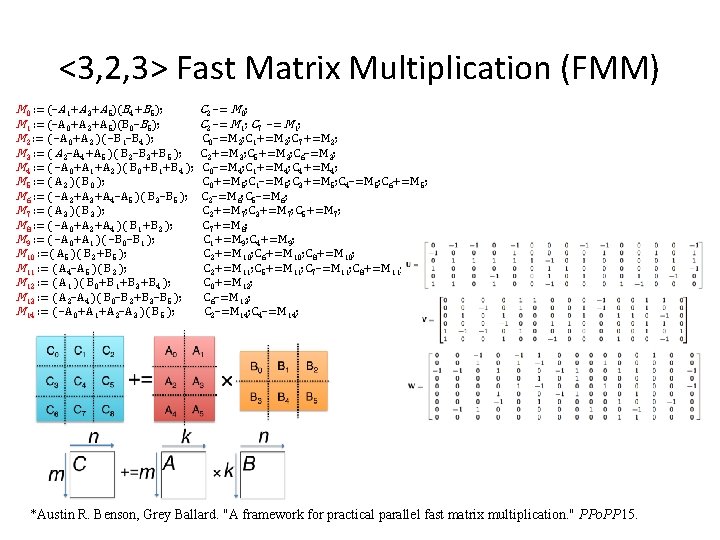

In my CUDA Program Structure post I mentioned that CUDA provides three abstractions. Matrix multiplication is also the core routine when computing convolutions based on Fast Fourier Transforms FFT 2 or the Winograd approach 3. For debugging run make dbg1 to build a debuggable version of the executable binary.

Matrix Multiplication in CUDA by using TILES. I was wondering if any one has some advice to make it faster which can be very helpful since I need to use MM millions of times during learning. I was wondering if any one has some advice to make it faster which can be very helpful since I need to use MM millions of times during learning.

Because I needed to manipulate the matrix multiplication I did not use CUBLAS for MM. One platform for doing so is NVIDIAs Compute Uni ed Device Architecture or CUDA. We have already covered the hierarchy of thread groups in Matrix Multiplication 1 and Matrix Multiplication 2In this posting we will cover shared memory and thread synchronization.

The above sequence is arranged in the increasing order of efficiency performance 1st being the slowest and 5th is the most efficient fastest. Well start with a very simple kernel for performing a matrix multiplication in CUDA.

Multiplication Of Matrix Using Threads Geeksforgeeks

Github Kberkay Cuda Matrix Multiplication Matrix Multiplication On Gpu Using Shared Memory Considering Coalescing And Bank Conflicts

General Matrix To Matrix Multiplication Between The Current Hsi Data Download Scientific Diagram

Matrix Multiplication Is A Key Computation Within Many Scientific Applications Particularly Those In Deep Learning Many Operations In Modern Deep Neural Netwo

Pdf Cuda Memory Techniques For Matrix Multiplication On Quadro 4000

Matrix Multiplication Cuda Eca Gpu 2018 2019

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Fast Sparse Matrix Multiplication Raphael Yuster Haifa University

Matrix Multiplication Cuda Eca Gpu 2018 2019

Assignment Of Computation In Matrix Multiplication Download Scientific Diagram

Generating Families Of Practical Fast Matrix Multiplication Algorithms

Matrix Multiplication In Cuda Ppt Download

Partial Kernel Codes For Matrix Multiplication Cuda Keywords Are Bold Download Scientific Diagram

Matrix Multiplication Cuda Eca Gpu 2018 2019

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

Cutlass Fast Linear Algebra In Cuda C Nvidia Developer Blog

Why Is Numpy Dot As Fast As These Gpu Implementations Of Matrix Multiplication Stack Overflow

Sparse Matrix Multiplication Description By Glyn Liu Medium

Parallel Matrix Multiplication C Parallel Processing By Roshan Alwis Tech Vision Medium